Wednesday, 10 October 2018

Requirements testability is an important concept when designing tests that ensure all requirements have been delivered and match the specification. Requirements can be documented in a way that dramatically increases the speed of test case design, while removing ambiguities at this vital stage of the software development lifecycle.

What do we mean by Testability?

The international standard IEEE 1233-1998 describes “testability” as the “degree to which a requirement is stated in terms that permit establishment of test criteria and performance of tests to determine whether those criteria have been met”.

Businesses often see “testability” in relation to the overall characteristics of a system and the ability to demonstrate the extent to which these (functional and non-functional aspects) “work” or “don’t work”.

It can be argued that testability is closely related to a tester’s ability to carry out their job, which (ultimately) is to demonstrate clearly, unambiguously, and cost-effectively whether requirements are met. This is something of a challenge, as it’s common for there to be a difference between “requirements” (what’s actually needed) and “specifications” (what’s being asked for). How often are specs plain “wrong”, exhibiting some of the following “mistakes”?

- Noise: Text containing no information relevant to any aspect of the problem

- Silence: A feature not covered by any text

- Over-specification: Description of the solution rather than of the problem

- Contradiction: Mutually incompatible descriptions of the same feature

- Ambiguity: Text that can be interpreted more than one way

- Forward reference: Referring to a feature not yet described

- Wishful thinking: Defining a feature that can’t be validated

- Weak phrases: Causing uncertainty (“adequate”, “usually”, “etc.”)

- Jigsaw puzzles: Requirements distributed across a document and then cross-referenced

- Duckspeak: Requirements only there to conform to standards

- Terminology invention: “user input/presentation function”; “airplane reservation data validation function”

- Putting the onus on developers and testers: Leaving them to guess what the requirements really are
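Some of these mistakes, weak phrases in particular, can be flagged mechanically before a review. The following is a minimal sketch in Python, not a definitive tool: the function name and the phrase list are hypothetical, and only “adequate”, “usually” and “etc.” come from the list above (the other phrases are assumptions added for illustration).

```python
import re

# Illustrative list only: the first three phrases come from the article,
# the remaining ones are assumptions added for this example.
WEAK_PHRASES = ["adequate", "usually", "etc.", "as appropriate", "user-friendly", "fast"]


def find_weak_phrases(requirement):
    """Return the weak phrases found in a requirement, in WEAK_PHRASES order."""
    text = requirement.lower()
    found = []
    for phrase in WEAK_PHRASES:
        # Require a word boundary on the left, and on the right only when
        # the phrase ends in a letter or digit (so "etc." still matches).
        right = r"\b" if phrase[-1].isalnum() else ""
        if re.search(r"\b" + re.escape(phrase) + right, text):
            found.append(phrase)
    return found


if __name__ == "__main__":
    for line in [
        "Performance should be adequate for users",
        "The search page returns results within 2 seconds",
    ]:
        print(line, "->", find_weak_phrases(line))
```

A check like this only catches wording. Silence, contradiction and over-specification still need a human reviewer.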

Testers have a role to play in making sure what’s been specified is what’s needed such that there is a genuine “requirements specification”.

Testability – Accidental or by Design?

The extent to which a tester is able to demonstrate whether requirements are met by a system depends on more than the skill and ability of the tester; it also relies on how soon testers are involved and how engaged they are throughout the product life cycle.

Testability is not accidental; it has to be built in, and the sooner the better! The optimum time is during the creation or definition of requirements; building it in should continue throughout architecture design, software design and coding.

Developers normally rely on a requirements spec (which could be a collection of user stories) to help define what the system should do. Similarly, a tester will use a requirements spec to develop test cases. A key characteristic of a “specification” is that if it’s to be a “specification”, it has to be specific. Sounds obvious, well maybe, but remember, business software is often not based on genuine business specifications, but on “business wafflings”.

A tester’s job suddenly becomes more difficult if specifications are unclear, ambiguous or missing; if they don’t reflect actual requirements; or if they can only be tested at an unacceptably high cost. Of course a testability review would look out for those possibilities.

Whilst some requirements may be verbal, documented in a spec, written as a user story or embedded in a collection of emails, others may not be stated at all; ensuring these are tested and not overlooked relies on the skill of the development staff and test team.

“Non-functional” requirements are frequently assumed rather than written. Users expect an app to launch and run each time they use it, though “reliability requirements” may not even have been specified. Businesses tend to expect new features to be added to a system and tested cost-effectively. They assume that the software is actually maintainable without anyone stating “maintainability” as a goal (i.e. the system is required to be “analysable, changeable, stable and testable”). Smartphone users will delete apps that drain the battery in a short time, but what are the chances that this “resource utilisation” requirement (efficiency) appears in any of the specs?

Life Cycle Models, Requirements and Role of Testers

Software development lifecycles that engage testers at the start and throughout provide a better chance of enabling projects to deliver a product that “testing can show meets customer requirements”.

Sequential lifecycle (Waterfall or V Model) approaches must engage testers during the requirements stage so that the foundations for testability are established early on. Otherwise they risk implementing a system which doesn’t work “as expected” and cannot be tested economically!

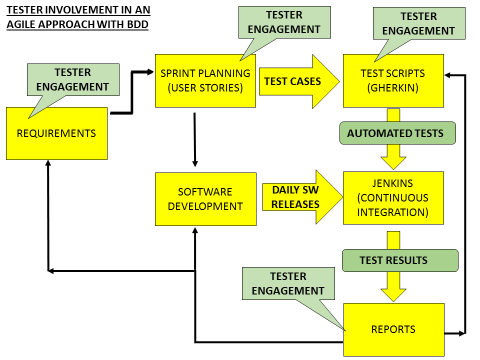

With an Agile approach testability can be built in more readily because of the shorter lifecycles, early tester involvement, early and frequent customer feedback plus the all-important responsiveness to change. This is further enhanced when a Behaviour Driven Development (BDD) approach is used because of the strong focus on the customer and software behaviour.

Regardless of lifecycle, testers attending requirements reviews can provide guidance on how clear, specific and unambiguous a requirement is (or not). Simply challenging assumptions during reviews, highlighting non-functional characteristics and asking the question “how will this feature be tested” should result in more requirements that are testable.

Business Analysts and Software Developers directly benefit from tester guidance in “getting requirements right”. A spec which says “performance should be adequate for users” is not testable as it stands. No indication is given as to what’s meant by performance; it could mean, amongst other things, UI responsiveness, elapsed time for the system to write to a backend database, screen refresh time, throughput of data or time to carry out a specific transaction. We also need to know the number of concurrent users to which “adequate performance” applies, plus who these users are (in what role will they be users of the system). Such a performance requirement needs breaking down into throughput, capacity or response time as appropriate, with required values assigned, before designing the architecture.
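To illustrate the difference, here is a minimal Python sketch of what “adequate performance” might look like once it has been broken down into a response time requirement with assigned values. Every name and number in it (the class, the transaction, the role, 50 users, 2 seconds, the 90th percentile) is a hypothetical assumption chosen for the example, not a value from any real project.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ResponseTimeRequirement:
    """A response time requirement stated in testable terms (hypothetical)."""
    transaction: str       # which operation is being timed
    user_role: str         # who the users are
    concurrent_users: int  # load under which the limit applies
    percentile: float      # e.g. 90 means "90% of requests"
    max_seconds: float     # the limit those requests must meet


def percentile_value(samples, percentile):
    """Nearest-rank percentile of a non-empty list of measurements."""
    ordered = sorted(samples)
    rank = math.ceil(percentile * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def is_met(requirement, measured_seconds):
    """True if the measured response times satisfy the requirement."""
    observed = percentile_value(measured_seconds, requirement.percentile)
    return observed <= requirement.max_seconds


# Assumed example values: 90% of "submit order" requests made by call-centre
# agents complete within 2 seconds while 50 agents use the system at once.
REQUIREMENT = ResponseTimeRequirement("submit order", "call-centre agent", 50, 90, 2.0)
SAMPLES = [0.8, 1.1, 1.2, 1.4, 1.5, 1.6, 1.7, 1.8, 1.9, 3.5]

if __name__ == "__main__":
    print("Requirement met:", is_met(REQUIREMENT, SAMPLES))
```

Unlike “adequate for users”, a requirement in this form gives the tester a pass/fail criterion, and gives the architect a target to design against.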

Testers can help identify and clarify both functional and non-functional needs to ensure what’s defined is:

- Complete

- Correct

- Feasible

- Necessary

- Prioritised

- Unambiguous

- Verifiable

- Consistent

- Traceable

- Concise

As a result, suitable test cases and software can be generated economically based on these needs and in a way more likely to result in a satisfied customer (not forgetting an “accepted system”).
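Two of the qualities listed above, “Verifiable” and “Traceable”, lend themselves to a simple automated check: every requirement should be linked to at least one test case. The sketch below assumes a hypothetical ID scheme (`REQ-n`, `TC-n`) and a plain dictionary of links; in practice this data would come from whatever test management tool the project uses.

```python
def untested_requirements(requirement_ids, test_links):
    """Return, in sorted order, the requirement IDs no test case traces back to.

    requirement_ids: iterable of requirement IDs, e.g. ["REQ-1", "REQ-2"]
    test_links: dict mapping a test case ID to the requirement IDs it covers
    """
    covered = {req for reqs in test_links.values() for req in reqs}
    return sorted(set(requirement_ids) - covered)


# Hypothetical example data.
REQUIREMENTS = ["REQ-1", "REQ-2", "REQ-3"]
TEST_LINKS = {
    "TC-1": ["REQ-1"],
    "TC-2": ["REQ-1", "REQ-3"],
}

if __name__ == "__main__":
    print("Requirements without tests:", untested_requirements(REQUIREMENTS, TEST_LINKS))
```

A requirement that appears in this list is either missing a test or is not yet stated in a way that allows one to be written.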

Acceptance testing of the system is holistic in the sense that users perform end to end tests on a system whilst also considering usability and performance elements.

Tester Skills and Training

It is worth mentioning skills and training. On the Certified Mobile Apps Software Testing Foundation course, some testers have said that (before the course) they “did not actually do mobile testing, they just thought they did”, mainly because what they did was essentially “functional” feature testing on a mobile device. The tests were pretty much the same as those run to test a PC app.

On finishing the course, the same delegates stated they felt more knowledgeable about mobile user needs. For example, they had become aware that “usability testing” is much more significant in the mobile world than for most PC-based apps. These testers could now ensure a focus on usability very early on in development. In fact, in the mobile arena, it should be considered before any software design takes place, due to everything from smaller screen sizes, variable screen resolutions and limited data input capability via soft keyboards to the zero-tolerance attitude of smartphone users when using the application.

The Agile Approach

Experience suggests that projects which engage testers at the start and throughout the development cycle provide a greater chance of enabling delivery of a product which by testing can be shown to meet customer needs. An Agile approach does exactly this.

Shorter life cycles with frequent releases, early feedback and close involvement of business users and testers in the team provide for something closer to what the business requires. An Agile approach provides numerous touch points at which testers can check that user stories are testable, especially when helping the business define suitable acceptance criteria.

Achieving greater clarity in the specification of “testable requirements” can be further enhanced by the use of Test Driven Development techniques, where developers write code to pass tests already written (and influenced by the testers in the team). These tests effectively become the requirements, though it can be argued this is still somewhat focused on “what is being asked”. A further improvement is to adopt the next logical step – a Behaviour Driven Development approach (BDD).

Behaviour Driven Development and Testability

The great thing about Behaviour Driven Development (BDD) is that it shifts the focus back on to the customer: what is captured, in human-readable stories, is the behaviour that users require of the system and its features.

BDD can create a new way of thinking and, if rigorously applied, forces definition of the “why” when creating a feature. A key point in BDD, when defining a business feature, is for the “three amigos” (business owner, developer and tester) to identify the business value. Herein lies a great opportunity for testers to really push back and change the mindset of those involved in defining requirements (as often the mindset is narrowly focused on defining the “what”).

Without going into detail on BDD (as much is already written and available), a feature is created and described in a feature file by the business. The follow-on work to create scenarios, or real examples of the “feature in use”, particularly by the tester, is a powerful way of ensuring the business, developers and testers achieve clarity on what is being asked for and why, as well as on each other’s perspectives on the feature. Tester scenarios (captured in the feature file) can bring a new perspective to the feature, one of testability; in essence, the feature file can be viewed as a “specification by examples in action”.

Epics and Stories can then be generated and test cases produced. The test cases can then be auto-tested against the application. It’s worth mentioning here that often, the challenge is getting to detailed test cases from the feature files when automating.

It must be pointed out that test cases do not have to be automated. In some cases automation has been the “Achilles Heel” of the project, with test managers spending large sums on an automated approach only to find that creating the automated test cases takes more skill and effort than originally envisaged in the initial euphoria to go down the BDD route. Sadly some then take the view that “BDD doesn’t work”, based on automation related issues and merely viewing BDD as a tool.

Although BDD enables the creation of tests which the business can understand, it also means testers need to be skilled in using a BDD environment if automation is adopted. Testers are required to write test cases in a domain specific language such as Gherkin (a common language supported by a BDD framework).
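To give a feel for how human-readable steps become executable tests, here is a deliberately small Python sketch of the idea: Given/When/Then lines are matched against patterns and handed to step functions. It is a toy written for this article, not the API of Behat or any other real BDD framework, and the basket scenario, the `step` decorator and `run_scenario` are all hypothetical names invented for the example.

```python
import re

# A hypothetical scenario written in Given/When/Then style.
SCENARIO = """\
Given a basket containing 2 items
When the customer adds 1 item
Then the basket contains 3 items
"""

STEPS = []  # (compiled pattern, handler) pairs, filled in by @step


def step(pattern):
    """Register a handler for step text that matches the pattern."""
    def register(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return register


@step(r"a basket containing (\d+) items?")
def given_basket(ctx, count):
    ctx["basket"] = int(count)


@step(r"the customer adds (\d+) items?")
def when_customer_adds(ctx, count):
    ctx["basket"] += int(count)


@step(r"the basket contains (\d+) items?")
def then_basket_contains(ctx, count):
    assert ctx["basket"] == int(count), f"expected {count}, got {ctx['basket']}"


def run_scenario(text):
    """Run each step in order; raise if a step is undefined or fails."""
    ctx = {}
    for line in text.strip().splitlines():
        keyword, _, body = line.strip().partition(" ")
        if keyword not in {"Given", "When", "Then", "And", "But"}:
            raise ValueError(f"not a step: {line!r}")
        for pattern, handler in STEPS:
            match = pattern.fullmatch(body)
            if match:
                handler(ctx, *match.groups())
                break
        else:
            raise LookupError(f"no step definition for: {body!r}")
    return ctx


if __name__ == "__main__":
    print(run_scenario(SCENARIO))
```

The scenario text stays readable by the business, while the step functions are where the tester’s automation skill is needed. That split is also where the extra effort described above tends to appear.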

On one of our projects (Agile-type lifecycle using BDD), some key tester engagement points where testers were able to assess, assist and help define/refine requirements were:

- Feature definition and scenarios / examples

- Initial user story creation with the information captured in a “feature file”

- Acceptance criteria definition with the business prior to design

- When creating tests for user stories (including functional and non-functional elements) to meet the acceptance criteria

- When business users reviewed and modified a feature and acceptance criteria which resulted in testers modifying the tests by changing or adding ‘scenarios’ to the file

- After test execution when test results were generated and checked.

This project was run in a highly automated environment. The tests created using Gherkin ran in an open source development framework (Behat). Frequent software releases were generated by the development team using a continuous integration system (Jenkins-based). The whole process was automated, so early and frequent feedback on test scenarios was provided via reports, enabling adjustments to the code, tests and, if necessary, user stories. The net result was a testable system which closely met the needs of the different user types, delivered on time and within budget.

Conclusion

It’s not surprising that almost everything that testers need in a specification in order to be able to do their job is also stuff that developers will need to do their work, remembering that what’s being asked for should meet user needs (i.e. what a business actually requires).

So it really all starts with the requirements. Engaging testers early on is critical to ensuring the correctness of specs. This message has to be taken on board by project managers and development managers (assuming test leaders are already aware).

When requirements are “untestable”, testers (and the business for that matter) have limited confidence that they (the testers), developers, and the business all have the same interpretation of the spec. The result is a recipe for misunderstanding between groups, incorrect code being written, and difficulty in test design and test creation.

Many projects have treated, and will continue to treat, testing as the “final quality check” (i.e. a little Quality Control but no Quality Assurance). When such an approach is adopted, do we expect to see a system which fails to meet a user’s needs or cannot be tested? Not necessarily, as systems can always be tested, but at what cost and with what impact on the business?

In our experience, projects where “testing” was engaged late on have normally suffered major overruns in terms of time and budget (by at least 50%), significant “rework by the development team” and delayed payment from a somewhat weary and disgruntled customer!

Agile lifecycle models do afford greater involvement of testing, hence the opportunity to improve overall “testability”, provided lessons are learned from the earlier sprints or releases.

Behaviour Driven Development goes a long way towards aligning what is specified (what’s been asked for in a spec) with customer requirements (what’s actually needed), in a way that can be demonstrated by running test cases. Testers will require interpersonal skills to proactively influence projects early on, as well as technical skills to work in an Agile and BDD environment.

The level of automation clearly impacts the demand for a higher skillset from testers. Test managers will need to be cognisant that an automation approach will take time, skills development, process changes and not just money. This is true of any type of automation regardless of whether using an Agile approach with BDD or not.

Finally, what is the key to success in achieving requirements testability?

In essence, test teams have to move up the value chain by developing skills in working with and assisting the business early on. Testers need to develop skills in writing test cases in domain specific languages plus increase their knowledge and experience of testing in different development environments (including Agile).

Alongside this, appropriate product knowledge is necessary, including knowledge of testing products used in the mobile world (where relevant). Success can only be ensured if the above is fully supported by managers (project, product, development and test) willing to embrace methods such as BDD.